Leveraging IT for business advantage research analysis


| Research on Leveraging IT for business Advantage |

Leveraging IT for Business Advantage

(A Draft)

Table of Contents

Introduction

Literature review

Discussion

Research methodology

Conclusion

References

Introduction

Cloud computing is a term used to describe the use of hardware and software delivered over a network (usually the Internet). Simply put, cloud computing is computing based on the Internet. In the past, people would run applications or programs from software downloaded onto a physical computer or server in their building (Metheny, 2013). Cloud computing lets people access the same kinds of applications through the Internet. It rests on the premise that the main computing takes place on a machine, often remote, that is not the one currently being used (Singh, 2016). Data collected during this process is stored and processed by cloud servers. Users can securely access cloud services using credentials obtained from the cloud computing provider (Sarojini, 2016).

Cloud security refers to a range of control-based policies and technologies designed to meet regulatory compliance rules and to protect the data, applications, infrastructure, and information associated with the use of cloud computing (Sarojini, 2016). Because the cloud is by nature a shared resource, privacy, access control, and identity management are of particular concern (Metheny, 2013). With many companies using cloud computing and related cloud providers for data operations, proper security in these operations and in other sensitive areas has become a priority for companies contracting with cloud providers (Sarojini, 2016).

Cloud security processes must address the security controls that cloud providers integrate to maintain customer privacy, comply with related regulations, and secure data (Metheny, 2013). As such, this article aims to discuss some key data security threats that cloud providers should consider mitigating to improve customer data security.

Cloud computing is now a global concept used by the majority of Internet users. The number of organizations, businesses, and individual users that rely on cloud-provided resources and store important information in the cloud has increased greatly over the years because of its simplicity and attractive features (Singh, 2016).

Despite its widespread use, cloud computing also faces obstacles when it comes to protecting data stored in the cloud. Cloud users currently raise major concerns about the security of their data transmitted in the cloud (Sarojini, 2016). Highly motivated and skilled hackers try their best to intercept or steal large amounts of data, including important information that is transmitted or stored in the cloud. In response to these hacking motives, various scholars have proposed many techniques to protect data security during transmission (Metheny, 2013).

Data protection has always been an important issue in information technology. In the cloud environment it becomes critical because the data is located in different places around the world. Although many cloud computing techniques have been studied by both academia and industry, data protection and confidentiality are becoming more important for the future development of cloud computing technology in government, industry, and business (Singh, 2016). Information security and privacy issues relate to both the hardware and the software in the cloud architecture. Information security and privacy are the two factors that consumers care most about in cloud technology (Sarojini, 2016).

Cloud computing has emerged as a modern technology that has evolved over the past few years and is seen as the next big thing in the years to come. Because it is new, it brings new security issues and challenges. In recent years, it has grown from a simple concept into an important part of the computer industry. Cloud computing has gained wide acceptance as virtualization, service-oriented architecture (SOA), and utility computing have been adopted (Sarojini, 2016). Architectural security issues also evolve with the different architectural designs that operate on cloud computing.

Cloud computing is perhaps one of the most promising computing technologies for both cloud providers and cloud consumers (Metheny, 2013). Yet to make the best use of cloud computing there is a growing need to close the existing security holes. The presence of data protection laws and information regulations will undoubtedly help to increase cloud security, since cloud providers will be required to satisfy these regulations in their security practices and policies. Such laws are not a prerequisite for data security in the cloud, however: a lack of data security will deter customers from moving to the cloud, so even where no data protection regulations exist, cloud providers still need to apply strong security policies (Singh, 2016).

The deployment model influences the level of security risk facing cloud users. As the deployment moves from the private to the public model, the security holes increase; these include ordinary hacking threats, web and web-browser vulnerabilities, and the risks that result from sharing storage among multiple tenants (Sarojini, 2016). As the customer moves up the cloud computing stack toward the Software as a Service level, the likelihood of being hacked is greater, because each layer adds new security holes and potential risks. Strong security policies and procedures should therefore be applied by an experienced security administration team at the cloud provider.

Cloud data security is a major concern, and various methodologies have been proposed to protect data in cloud computing, which also raises the issue of privacy rights. Data storage is a particular problem, since important information must not be intercepted; a recent case involving WikiLeaks drew attention to data security. Cloud computing works in layers, so applying policies on top of these layers provides a better approach to managing security issues (Metheny, 2013). Cloud computing has opened a new horizon for data storage and service deployment. The most important thing about cloud computing is that it gives customers a new way to increase capacity and add functionality to their machines on the fly. Before getting started with cloud computing, it is important to understand three concepts:

Cluster computing

Grid computing

Utility computing

In cluster computing, a cluster is a group of interconnected local computers that work together toward a single purpose. In contrast, grid computing connects large numbers of geographically distributed individual computers to build a massive infrastructure (Singh, 2016). Utility computing works on a pay-as-you-go model, meaning you pay for what you access and use from a pool of shared resources (e.g., storage systems, software, and servers), much as you pay for utilities such as water, electricity, and gas. This document is intended to provide guidance on the benefits and types of cloud computing. It describes the process we followed to trace the development of cloud computing, followed by the benefits of cloud computing, cloud computing models, types of clouds, and the security problems and challenges in cloud computing identified in our research (Singh, 2016).
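The pay-as-you-go idea behind utility computing can be illustrated with a small metering sketch. The resource names and unit prices below are hypothetical placeholders chosen for illustration, not real provider rates.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UsageRecord:
    resource: str      # hypothetical resource name, e.g. "storage_gb_month"
    quantity: float    # units actually consumed in the billing period
    unit_price: float  # hypothetical price per unit


def metered_bill(records):
    """Charge only for what was used: sum quantity * unit_price."""
    return round(sum(r.quantity * r.unit_price for r in records), 2)


# Example usage with made-up figures.
usage = [
    UsageRecord("storage_gb_month", 50, 0.02),
    UsageRecord("compute_hours", 120, 0.10),
]
total = metered_bill(usage)
```

A customer who consumes nothing in a period is billed nothing, which is the property that distinguishes this model from buying fixed capacity up front.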

Literature review

According to Goyal (2019), data security has become a significant threat in the cloud computing environment. While there are notable privacy and security techniques that can mitigate these threats, there are no good techniques to actively eliminate them. On the other hand, Cook et al. (2018) determined that a substantial degree of data encryption, access control, strong authentication, data segregation models, and avoidance of high-value data storage could essentially remove additional threats. However, recent cases of sophisticated data leaks show that, despite efforts to resolve the leaks, incidents involving network vulnerabilities on cloud servers have increased to a greater degree in the United States.

Jan de Muijnck Hughes proposed a security technique called Predicate Based Encryption (PBE) in 2011. PBE belongs to the asymmetric encryption family and derives from Identity Based Encryption. The technique integrates attribute-based access control (ABAC) with asymmetric encryption, thereby allowing a single-encryptor/multi-decryptor environment to be implemented using a single scheme. The predicate-based encryption implementation targets both Platform as a Service and Software as a Service. The proposed technique also prevents unwanted exposure, unsolicited leaks, and other unexpected breaches of privacy of data residing in the cloud.
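The access-control half of this idea can be sketched as a predicate over user attributes. The sketch below is only an illustration of attribute-based access control; it performs no cryptography and is not the scheme proposed in the cited work. The function names and attribute keys are assumptions made for the example.

```python
def make_policy(**required):
    """Build a predicate that holds when every required attribute matches.

    Hypothetical helper: in predicate-based encryption the policy would be
    bound to the ciphertext; here it is just a plain Python function.
    """
    def predicate(attributes):
        return all(attributes.get(key) == value for key, value in required.items())
    return predicate


def can_decrypt(policy, user_attributes):
    """Return True if the user's attributes satisfy the policy."""
    return policy(user_attributes)


# One policy written once by the encryptor, evaluated for many users.
policy = make_policy(department="finance", role="auditor")
alice = {"department": "finance", "role": "auditor", "region": "EU"}
bob = {"department": "finance", "role": "intern"}
```

Here `can_decrypt(policy, alice)` holds and `can_decrypt(policy, bob)` does not, which mirrors the single-encryptor, multi-decryptor setting: access depends on attributes rather than on a per-user key list.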

In 2013, Miao Zhou described five techniques to ensure data integrity and security in cloud computing: an innovative tree-based key management scheme, privacy-enhanced data outsourcing in the cloud, privacy-preserving access control in the cloud, privacy-enhanced keyword search in the cloud, and public remote verification of the integrity of private data.

That work applied a keyword search mechanism to enable effective multi-user keyword search while hiding personal information in search queries. It also presented an encryption scheme for a two-tier system to achieve flexible and fine-grained access control in the cloud. The test results show that the proposed scheme is effective, especially when the data file size is large or the integrity checks are frequent.

In 2014, Aastha Mishra introduced an advanced secret sharing key management system. The goal of that work is to provide a more reliable, decentralized, lightweight key management technique for cloud systems, giving more efficient key management and data security. The proposed technique maintains the security and privacy of user data by spreading key shares across multiple clouds through secret sharing, and by using a further method to verify the integrity of the shares.

The technique also provides better security against Byzantine failures, server collusion, and data modification attacks.
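To make the idea of splitting a key into shares concrete, here is a minimal sketch of threshold secret sharing. It uses Shamir's scheme as an illustrative stand-in; the source does not state which secret sharing scheme the cited system uses, and the prime, function names, and parameters are assumptions for this example.

```python
import secrets

# Assumption: a fixed Mersenne prime as the field modulus; the secret must be smaller.
PRIME = 2**127 - 1


def split_secret(secret, threshold, shares):
    """Split `secret` into `shares` points; any `threshold` of them recover it."""
    # Random polynomial of degree threshold-1 whose constant term is the secret.
    coeffs = [secret] + [secrets.randbelow(PRIME) for _ in range(threshold - 1)]

    def evaluate(x):
        acc = 0
        for c in reversed(coeffs):  # Horner's rule, modulo PRIME
            acc = (acc * x + c) % PRIME
        return acc

    return [(x, evaluate(x)) for x in range(1, shares + 1)]


def recover_secret(points):
    """Lagrange interpolation at x = 0 over the prime field."""
    secret = 0
    for i, (xi, yi) in enumerate(points):
        num, den = 1, 1
        for j, (xj, _) in enumerate(points):
            if i != j:
                num = (num * -xj) % PRIME
                den = (den * (xi - xj)) % PRIME
        # pow(den, -1, PRIME) is the modular inverse (Python 3.8+).
        secret = (secret + yi * num * pow(den, -1, PRIME)) % PRIME
    return secret


# Each share could be stored with a different cloud provider.
key_shares = split_secret(123456789, threshold=3, shares=5)
```

With a threshold of 3 out of 5, the loss or compromise of any two storage locations neither destroys the key nor reveals it, which is the kind of tolerance to server failure and collusion the paragraph above describes.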

The security and availability of cloud services depend mainly on the APIs used for data access and data encryption in the cloud. Additional research may be done to ensure the security of these APIs and network interfaces (Singh, 2016). New security proposals could address the challenges of protecting services from targeted and accidental attacks and from terms-of-service violations. In addition, layered APIs become more complex when third-party operators use cloud services, and the rightful owners may then be unable to access the service. Malicious insiders are also a common threat to cloud services because they violate the terms of service and gain access to information they are not permitted to see. Usually, a malicious insider is an employee who steals confidential information belonging to the company or its legitimate users. Malicious internal users can also corrupt information, especially in peer-to-peer file sharing systems.

Discussion

The benefits of cloud computing include the following:

High Speed – Quick Deployment

Automatic Software Updates and Integration

Efficiency and Cost Reduction

Data Security

Scalability

Collaboration

Unlimited Storage Capacity

Back-up and Restore Data

Disaster Recovery

Mobility

Data Loss Prevention

Control

Competitive Edge

While there are many benefits to adopting cloud computing, there are also significant barriers to adoption (Singh, 2016). One of the biggest barriers is security, followed by compliance, privacy, and legal issues. Since cloud computing represents a relatively new computing paradigm, there is great uncertainty about how to achieve security at all levels (e.g., network, server, application, and data) and about how application security moves to the cloud. This uncertainty has led CIOs to state repeatedly that security is their top concern with cloud computing (Sarojini, 2016).

Security issues relate to areas of risk such as external data storage, dependence on the "public" internet, lack of control, multi-tenancy, and integration with internal security (Metheny, 2013). Compared with traditional technologies, the cloud has many peculiarities, such as its large scale and the fact that the resources owned by cloud providers are completely distributed, heterogeneous, and fully virtualized. Traditional security mechanisms such as identity, authentication, and authorization are no longer sufficient for clouds in their current form (Singh, 2016). Security controls in the cloud are, for the most part, the same as security controls in any computing environment. However, because of the cloud service models used, the operating models, and the technologies used to enable cloud services, cloud computing can present different risks to the organization than traditional IT solutions. Unfortunately, building security into these solutions is often seen as making them more rigid.

Migrating mission-critical applications and sensitive data to public cloud environments, beyond the data center network under their control, is a major concern for companies. To alleviate these concerns, cloud solution providers must ensure that customers continue to have the same security and privacy controls over their applications and services, provide evidence to customers that their organization is secure and can meet its service level agreements, and demonstrate compliance to auditors (Sarojini, 2016).

This section presents a classification of security issues for cloud computing based on the so-called SPI model (SaaS, PaaS and IaaS), identifying the main vulnerabilities of this type of system and the most important threats found in the literature on cloud computing and its environment. A threat is a potential attack that could lead to the misuse of information or resources, and the term vulnerability refers to flaws in a system that allow an attack to succeed (Singh, 2016). Some surveys focus on a single service model or list general cloud security issues without distinguishing between vulnerabilities and threats. Here, we present a list of vulnerabilities and threats, and we also show which cloud service models may be affected (Metheny, 2013). In addition, we describe the relationship between these vulnerabilities and threats, how the vulnerabilities can be exploited to perform an attack, and some countermeasures against these threats that attempt to solve or reduce the identified problems.

Cloud Computing Platforms

Cloud computing is offered through many web services platforms, such as:

Amazon Web Services

Google Cloud

Metapod

Microsoft Azure

Cisco

etc.

Two of them are explained below:

Introduction

AWS stands for Amazon Web Services, a subsidiary of Amazon. Companies, individuals, and governments use it as a platform and API for cloud computing. These cloud computing web services include “abstract technical infrastructure and distributed computing tools and blocks” (aws, 2022). Amazon Web Services is used all over the globe through server farms. Subscribers pay fees according to their usage and the hardware, software, operating system, and other features and services they choose. AWS has been very beneficial for businesses, including small enterprises, and it is a popular option among new and existing companies.

Google Cloud Platform was established on October 6, 2011, and has become a successful cloud computing service. It is a medium through which people can easily access cloud systems and other computing services. Google Cloud Platform provides services such as networking, computing, storage, big data, machine learning, and management services. The platform consists of physical assets, such as computers and hard disks, and virtual resources. Its benefits include better pricing and deals, increased service and performance, the ability for users to work from anywhere, better and more efficient updates, and versatile security methods.

Models and Services:

AWS:

Amazon Web Services includes over 200 services, covering application services, analytics, machine learning, networking, developer tools, storage, databases, mobile, management, tools for the Internet, computing, and RobOps (aws, 2020). Its most important services include Amazon Connect, AWS Lambda, Simple Storage Service, and Amazon Elastic Compute Cloud (aws, 2020). Amazon Elastic Compute Cloud provides a virtual cluster of computers that is available at all times over the Internet. Most of the services are not exposed directly to end users; instead, they offer functionality through APIs that developers can use in their applications.

Google Cloud:

Google Cloud Platform provides its services according to several models. The three service models defined by NIST are:

Infrastructure as a Service (IaaS)

Platform as a Service (PaaS), and

Software as a Service (SaaS)

The three models divide management responsibility between customer and provider as follows:

Infrastructure as a Service (IaaS)

Applications (Managed by Customer)

Data (Managed by Customer)

Runtime (Managed by Customer)

Middleware (Managed by Customer)

O/S (Managed by Customer)

Virtualization (Managed by Provider)

Networking (Managed by Provider)

Storage (Managed by Provider)

Servers (Managed by Provider)

Platform as a Service (PaaS)

Applications (Managed by Customer)

Data (Managed by Customer)

Runtime (Managed by Provider)

Middleware (Managed by Provider)

O/S (Managed by Provider)

Virtualization (Managed by Provider)

Networking (Managed by Provider)

Storage (Managed by Provider)

Servers (Managed by Provider)

Software as a Service (SaaS)

Applications (Managed by Provider)

Data (Managed by Provider)

Runtime (Managed by Provider)

Middleware (Managed by Provider)

O/S (Managed by Provider)

Virtualization (Managed by Provider)

Networking (Managed by Provider)

Storage (Managed by Provider)

Servers (Managed by Provider)
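The responsibility split listed above can be captured in a small lookup structure. This is a minimal sketch that encodes only the lists in this section; the variable and function names are assumptions for illustration.

```python
# Layers ordered from the top of the stack to the bottom, as listed above.
LAYERS = [
    "Applications", "Data", "Runtime", "Middleware", "O/S",
    "Virtualization", "Networking", "Storage", "Servers",
]

# Number of layers, counted from the top, that the customer manages.
CUSTOMER_MANAGED = {"IaaS": 5, "PaaS": 2, "SaaS": 0}


def responsibility(model):
    """Map every layer to 'Customer' or 'Provider' for a service model."""
    cut = CUSTOMER_MANAGED[model]
    return {
        layer: ("Customer" if index < cut else "Provider")
        for index, layer in enumerate(LAYERS)
    }


iaas = responsibility("IaaS")
paas = responsibility("PaaS")
saas = responsibility("SaaS")
```

Encoding the split as a single cut point per model makes the pattern explicit: moving from IaaS to PaaS to SaaS shifts the boundary upward, so the provider manages progressively more of the stack.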

Resource Virtualization:

AWS:

Amazon Elastic Compute Cloud (EC2) instances are a central part of Amazon Web Services and of its cloud computing platform. The hardware of the host computer depends on the instance type used (aws, 2020). Amazon Web Services uses two distinct types of virtualization to support EC2 instances:

PV

PV stands for paravirtualization. On AWS it supports only Linux. It has traditionally been credited with better performance than full virtualization because the guest kernel can communicate directly with the hypervisor.

HVM

HVM stands for hardware-assisted virtual machine. It is also known as full virtualization.

Google Cloud:

Google Cloud Platform supports guest images running Linux and Microsoft Windows and uses KVM as the hypervisor, launching virtual machines based on the 64-bit x86 architecture.

Scaling and capacity planning:

AWS:

Costs can be saved by paying only for what one needs. This is done through capacity planning, in which usage data is collected and analyzed (aws, 2020). Similarly, a scaling plan provides scaling policies and creates a plan for resource utilization. Users can create their own scaling strategies, and strategies can be kept separate for different types of resources. Scaling strategies and capacity planning are useful because they reduce cost and improve resource utilization.
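A scaling policy of this kind can be sketched as a proportional rule that sizes the fleet so that average utilization returns to a target. This is a generic illustration under stated assumptions, not the exact algorithm of any AWS scaling service; the function name, parameters, and default bounds are hypothetical.

```python
import math


def desired_instances(current_instances, observed_utilization,
                      target_utilization, minimum=1, maximum=10):
    """Proportional scaling: keep average utilization near the target.

    Utilization values are percentages; minimum and maximum are
    hypothetical fleet-size bounds for this sketch.
    """
    if target_utilization <= 0:
        raise ValueError("target_utilization must be positive")
    raw = math.ceil(current_instances * observed_utilization / target_utilization)
    return max(minimum, min(maximum, raw))


scale_out = desired_instances(4, observed_utilization=90, target_utilization=60)
scale_in = desired_instances(4, observed_utilization=15, target_utilization=60)
capped = desired_instances(8, observed_utilization=100, target_utilization=40)
```

The lower and upper bounds matter as much as the formula: the minimum protects availability during quiet periods, and the maximum caps spending when demand spikes.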

Google cloud:

Google Cloud has likewise adopted many strategies so that its users can save costs and resources.

Load Balancing:

AWS:

A load balancer is used to “accept incoming traffic from clients and routes requests to its registered targets” (aws, 2020). It distributes the workload across different resources. One of its advantages is that compute resources can be added to and removed from the load balancer as users' needs change, without affecting the overall operation of the application. Several interfaces are available on Amazon Web Services to create, analyze, and manage load balancers, including the AWS Management Console, the AWS SDKs, the AWS Command Line Interface (AWS CLI), and the Query API (aws, 2022).
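The behavior described above, routing requests to registered targets while targets are added and removed, can be sketched with a simple round-robin balancer. This is a conceptual illustration only; the class and method names are hypothetical and do not correspond to the AWS API, and real load balancers also perform health checks and support other routing algorithms.

```python
class RoundRobinBalancer:
    """Distribute requests across registered targets in rotation."""

    def __init__(self, targets=()):
        self._targets = list(targets)
        self._next = 0  # running counter of routed requests

    def register(self, target):
        """Add a compute resource without interrupting routing."""
        self._targets.append(target)

    def deregister(self, target):
        """Remove a compute resource; remaining targets keep serving."""
        self._targets.remove(target)

    def route(self, request):
        """Pick the target that should handle this request."""
        if not self._targets:
            raise RuntimeError("no registered targets")
        target = self._targets[self._next % len(self._targets)]
        self._next += 1
        return target


balancer = RoundRobinBalancer(["server-a", "server-b"])
first_four = [balancer.route(n) for n in range(4)]
```

Because clients only ever talk to the balancer, the set of targets behind it can change at any time, which is why adding or removing resources does not affect the application as a whole.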

Google Cloud:

Load balancers are managed services on GCP that distribute traffic across multiple instances of your application. GCP bears the burden of managing operational overhead and reduces the risk of having a non-functional, slow, or overburdened application.

Load balancing in Google Cloud Platform has three categories:

Global External HTTP(S) load balancer

Regional External HTTP(S) load balancer

Internal HTTP(S) load balancer

Security:

AWS:

Amazon Web Services claims to be the most secure platform for cloud computing. It aims to give its users an environment in which they can run their businesses securely. AWS data centers and networks are built to protect information related to users, such as their identities, devices, and applications. According to AWS, this lets users worry less about privacy and security, focus on their business, and grow and innovate (aws, 2022).

Google Cloud:

The Google security model is built on more than 15 years of experience focused on keeping customers safe on Google products. Google Cloud Platform allows your applications and data to operate on the same trusted security model that Google built for its own network.

Database Technology (open source and licensed):

AWS:

Amazon Web Services is a cloud computing platform that supports both open-source and licensed software. Users and customers can create and run open-source software on Amazon Web Services. AWS states that open-source software is beneficial for everyone and therefore supports it. AWS open-source projects include Babelfish for PostgreSQL, EKS Distro, Bottlerocket, OpenSearch, Firecracker, FreeRTOS, and the AWS Cloud Development Kit (aws, 2021). For licensed databases there are two licensing models: “License Included” and “Bring Your Own License” (BYOL). The service is described as fully managed, highly available, fast, easy to migrate to, flexible in licensing, and reliable (aws, 2020).

Google Cloud:

The databases that Google Cloud Platform offers are:

Cloud SQL

Cloud Spanner

Bare Metal Solution for Oracle

BigQuery

Cloud Bigtable

Firestore

Firebase Realtime Database

Memorystore

MongoDB Atlas

Google Cloud Partner Services

Privacy Compliance:

AWS:

Amazon Web Services states that it does not compromise on the privacy of its customers and users, and good privacy protection is presented as one of the major advantages of using the platform. AWS is transparent in its privacy commitments, and users control, create, and manage their data themselves. AWS has raised its standards of data privacy, and users can easily understand the data privacy contracts that AWS provides because the language used is simple.

Google Cloud:

Google Cloud's privacy commitments likewise encourage users to adopt the platform.

Content Delivery:

AWS:

The content delivery network is an important part of Amazon Web Services. It improves content delivery by replicating commonly requested content, which reduces the load on the application origin and helps improve performance and scaling. It also creates and manages connections from requesters and keeps them secure. Customers now commonly use content delivery networks for interactivity and content delivery. One such content delivery network is Amazon CloudFront, which provides secure and reliable application delivery.

Google Cloud:

Cloud content delivery network (CDN) stores content locally at certain edges and works with the HTTP(S) load balancing service to deliver this content to users. It is important to keep in mind that not all data will be stored on the CDN.
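The way an edge location serves cached content and falls back to the origin can be sketched as a small time-to-live cache. This is a conceptual illustration, not the behavior of Cloud CDN or Amazon CloudFront specifically; the class name, the TTL value, and the injectable clock are assumptions for the example.

```python
import time


class EdgeCache:
    """Serve content from a local copy until it expires, then refetch."""

    def __init__(self, fetch_from_origin, ttl_seconds=60.0, clock=time.monotonic):
        self._fetch = fetch_from_origin  # callable: path -> content
        self._ttl = ttl_seconds
        self._clock = clock              # injectable so the sketch is testable
        self._store = {}                 # path -> (expires_at, content)
        self.origin_hits = 0             # how often the origin was contacted

    def get(self, path):
        now = self._clock()
        entry = self._store.get(path)
        if entry is not None and entry[0] > now:
            return entry[1]              # cache hit: origin is not contacted
        self.origin_hits += 1            # miss or expired: go back to origin
        content = self._fetch(path)
        self._store[path] = (now + self._ttl, content)
        return content


# Hypothetical origin and a fake clock, used only to demonstrate the flow.
fake_time = [0.0]
cache = EdgeCache(lambda path: "content of " + path,
                  ttl_seconds=10, clock=lambda: fake_time[0])
first = cache.get("/logo.png")
second = cache.get("/logo.png")
```

Only the first request reaches the origin; repeated requests within the TTL are answered at the edge, which is how replication of popular content reduces origin load. Content that is never requested, or that has expired, is not held at the edge, consistent with the point that not all data is stored on the CDN.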

Cloud Management:

AWS:

Cloud management tools help AWS users to enhance their cloud strategy. They provide solutions for managing operations and governance. The categories of AWS cloud management tools include cloud governance and resource and cost optimization (aws, 2020).

Google Cloud:

The following tools are available to manage Google Cloud Platform:

Cloud Endpoints

Cloud Console Mobile App

Cost Management

Intelligent Management

Carbon Footprint

Google Cloud Marketplace

Google Cloud Console

Service Catalog

Cloud Shell

Cloud APIs

Config Connector

Terraform on Google Cloud

Price/cost comparison of key services:

AWS:

Many customers find Amazon Web Services prices somewhat high for personal use, but over the long term, once maintenance, electricity, and similar costs of running one's own infrastructure are taken into account, the prices are not that high.

Google Cloud:

Google Cloud costs less than AWS. Its offerings include role-based support and premium support.

Research methodology

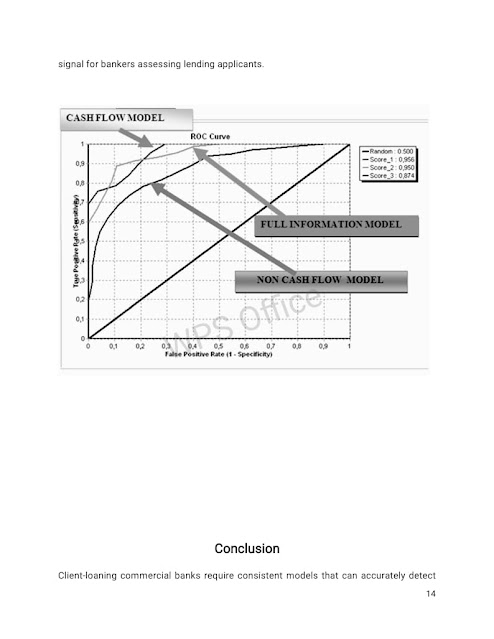

In this study, emphasis will be placed on secondary quantitative analysis of a relevant dataset, namely “Cybersecurity Breach”, extracted from Kaggle, with columns of data for legal entity name, state, company, location, summary, and year. This dataset includes 1,055 valid records, which is very helpful in identifying critical aspects of network security vulnerabilities (Kaggle.com 2022). The analysis will be performed with the help of SPSS to understand the confidence, validity, and percentage of the data breaches.
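The percentage breakdown described above could also be computed outside SPSS. The sketch below is a minimal illustration in Python of one such frequency analysis; the column name "year" and the sample rows are placeholders invented for the example and are not taken from the Kaggle dataset.

```python
from collections import Counter


def share_by_field(rows, field):
    """Percentage of valid records per distinct value of `field`.

    Rows with a missing or empty value are treated as invalid and skipped.
    """
    counts = Counter(
        row[field] for row in rows if row.get(field) not in (None, "")
    )
    total = sum(counts.values())
    if total == 0:
        return {}
    return {value: round(100 * n / total, 1) for value, n in counts.items()}


# Placeholder rows for illustration only; not real breach records.
sample_rows = [
    {"year": 2012}, {"year": 2012}, {"year": 2013}, {"year": ""},
]
shares = share_by_field(sample_rows, "year")
```

The same function can be applied to other categorical columns, such as state, to see where reported breaches are concentrated.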

Conclusion

Cloud computing is a relatively new concept that has many advantages for users; however, it also has some security issues that can slow down its adoption. Understanding what gaps exist in cloud computing will help organizations make the transition to the cloud. As cloud computing leverages many technologies, it also inherits their security issues. Traditional web applications, data storage, and virtualization have come under scrutiny, but some of the proposed solutions are incomplete or non-existent. We have covered the security issues for the cloud models SaaS, PaaS, and IaaS, which vary by model. As described in this article, storage, virtualization, and networking are the key security concerns of cloud computing. Virtualization, which allows multiple users to share a single physical server, is one of the major concerns of cloud users. Another challenge is that there are many different types of virtualization technologies, and each can handle security mechanisms in different ways. Virtual networks are also the target of certain attacks, especially when communicating with remote virtual machines.

In short, cloud computing shows significant potential in delivering easy-to-manage, cost-effective, powerful, and flexible resources over the Internet. These characteristics encourage individual users and organizations to migrate their services and applications to the cloud. However, services offered by third-party cloud providers present an additional security risk and pose a substantial data security risk to user privacy. Therefore, organizations and cloud service providers should take measures to avoid such threats. Cloud computing has a promising future. It is scalable and saves companies a lot of money on infrastructure, so it has become a lucrative option for businesses. This leads many companies to allocate budgets for the transition to the cloud. Despite all the security issues, companies are still in the process of deploying their applications in the cloud.

References

Metheny, M. (2013). The FedRAMP Cloud Computing Security Requirements. Federal Cloud Computing, 8(12), 241-327. doi:10.1016/b978-1-59-749737-4.00009-5 https://www.sciencedirect.com/book/9781597497374/federal-cloud-computing

Metheny, M. (2017). Security and privacy in public cloud computing. Federal Cloud Computing, 7(8), 79-115. doi:10.1016/b978-0-12-809710-6.00004-4. https://www.sciencedirect.com/book/9780128097106/federal-cloud-computing?utm_content=infosec_PR&utm_medium=pressrls&utm_source=publicity

Singh, B., & K.S., J. (2016). Security Management in Mobile Cloud Computing. IEEE Xplore. https://ieeexplore.ieee.org/abstract/document/7566315

Sudhansu R. L. et al. (2014) Enhancing Data Security in Cloud Computing Using RSA Encryption and MD5 Algorithm, International Journal of Computer Science Trends and Technology (IJCST), Volume 2, Issue 3, June 2014. https://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.677.7852&rep=rep1&type=pdf

Aastha Mishra (2014) Data Security in Cloud Computing Based on Advanced Secret Sharing Key Management System, 20 Jan, 2019 [Online]. https://www.ethesis.nitrkl.ac.in/5845/1/212CS2110.pdf

Nesrine Kaaniche (2014) Cloud Data Security based on Cryptographic Mechanisms, 26 Jan, 2019 [Online]. https://www.tel.archives-ouvertes.fr/tel-01146029/document

Afnan U.K. (2014) Data Confidentiality and Risk Management in Cloud Computing 2 Feb, 2019 [Online]. https://www.ethesis.whiterose.ac.uk/13677/1/Thesis_Final_Afnan _27072016_ EngD.pdf

Sarojini G. et al. (2016) Trusted and Reputed Services using Enhanced Mutual Trusted and Reputed Access Control Algorithm in Cloud, 2nd International Conference on Intelligent Computing, Communication & Convergence (ICCC-2016). https://www.researchgate.net/publication/306068888_Trusted_and_Reputed_Services_Using_Enhanced_Mutual_Trusted_and_Reputed_Access_Control_Algorithm_in_Cloud

Bandaru, A. (2010), Amazon Web Services, Research Methods and Professional issues, https://www.researchgate.net/publication/347442916_AMAZON_WEB_SERVICES

ScienceDirect. (2016), Amazon Web Services, https://www.sciencedirect.com/topics/engineering/amazon-web-services

Mirghani, S. (2017), Comparison between Amazon S3 and Google Cloud Drive, https://dl.acm.org/doi/abs/10.1145/3158233.3159371

Aws, AWS Management Tools Competency Categories, https://aws.amazon.com/products/management-tools/partner-solutions/?partner-solutions-cards.sort-by=item.additionalFields.partnerNameLower&partner-solutions-cards.sort-order=asc&awsf.partner-solutions-filter-partner-type=*all&awsf.Filter%20Name%3A%20partner-solutions-filter-partner-use-case=*all&awsf.partner-solutions-filter-partner-location=*all

AWS, A Content delivery network, https://aws.amazon.com/caching/cdn/

Amazon Web Services, https://aws.amazon.com/compliance/data-privacy/

Amazon Web Services, open source at aws, https://aws.amazon.com/opensource/?blog-posts-content-open-source.sort-by=item.additionalFields.createdDate&blog-posts-content-open-source.sort-order=desc

Amazon Web Services, https://aws.amazon.com/rds/oracle/

Amazon Web Services, AWS cloud security, https://aws.amazon.com/security/

Amazon Web Services, elastic load balancing, https://docs.aws.amazon.com/elasticloadbalancing/latest/userguide/what-is-load-balancing.html

Hohenbrink, G. (2020). GCP 101: An Introduction to Google Cloud Platform. Onix. https://www.onixnet.com/insights/gcp-101-an-introduction-to-google-cloud-platform#:~:text=Google%20Cloud%20Platform%20(GCP)%20operates,housed%20near%20your%20physical%20location.

Keshari, K. (2021). What is Google Cloud Platform (GCP)? – Introduction to GCP Services & GCP Account. Edureka. https://www.edureka.co/blog/what-is-google-cloud-platform/

Saran, G. Introduction To Google Cloud Platform. WhizLab. https://www.whizlabs.com/blog/google-cloud-platform/

Admin Globaldots (2018). 3 Key Cloud Computing Benefits for Your Business. Globaldots. https://www.globaldots.com/resources/blog/cloud-computing-benefits-7-key-advantages-for-your-business/

Google Cloud management tools. Google Cloud. https://cloud.google.com/products/management

Google Cloud Platform – Google Cloud CDN. Devopspoints. https://devopspoints.com/google-cloud-platform-google-cloud-cdn.html

We are IOD (2020). Load Balancing on Google Cloud Platform (GCP): Why and How. Level Up. https://levelup.gitconnected.com/load-balancing-on-google-cloud-platform-gcp-why-and-how-a8841d9b70c

Google Cloud Databases. Google Cloud.
